Maximum computational performance through parallelization

Parallelization is a straightforward way to speed up simulations and deliver the best value to customers. The idea is simple: instead of using a single CPU, use dozens or even hundreds of CPUs that calculate in parallel. This, however, comes with its own set of issues.

Although the idea is simple, it is difficult to implement when individual calculations depend on each other. Consider, for example, an urban microclimate simulation: the temperature of a surface influences how fast water can evaporate from it, but the evaporation rate in turn influences the temperature. Calculating one of these quantities in parallel with the other is very tricky, and in practice even well-known simulation programs fail to implement this. As a consequence, simulations are severely limited in size, level of detail and accuracy, while still taking a long time to complete.

The code that we write at Rheologic, however, is optimized for exactly that purpose. Running on 32, 64, 128 or even more CPUs in parallel allows us to complete far larger projects in greater detail and in less time than would otherwise be possible, bringing the best value to our customers while minimizing energy use. While others may take several weeks to complete a simulation, most of our wind and microclimate projects are done in under a week. While others might simply disregard surrounding buildings and omit details such as balconies and small structures, we can realistically capture many more details over a larger area. This includes balconies, actual tree surfaces from lidar data, thin structures such as walls, and the terrain, as well as any tall structures that influence the flow around the target area.

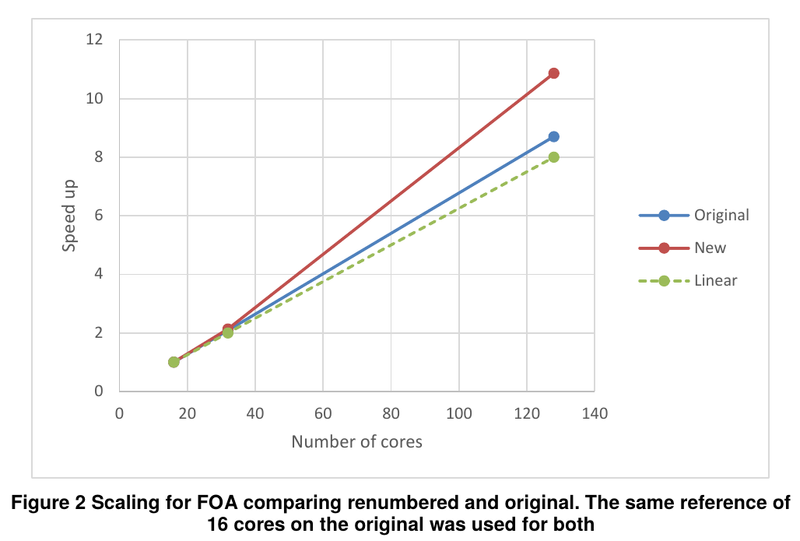

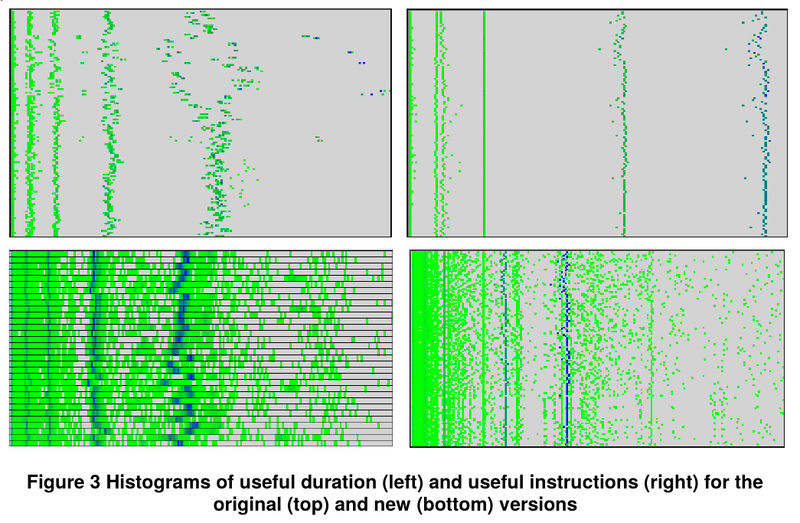

To ensure the best value for our customers and minimal environmental impact, we work with the European Center of Excellence in High-Performance Computing (POP CoE) to optimize our code with state-of-the-art supercomputing analytics. Parallelizing a computation always incurs overhead, because data needs to be exchanged between the CPUs that are working in parallel. This overhead is unavoidable, but many software projects have the additional problem that each extra CPU adds less and less speedup the more CPUs are used.
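To illustrate the diminishing returns described above, here is a minimal Python sketch built on a hypothetical toy cost model (not Rheologic's code or measured data): the computational work is divided evenly across `p` CPUs, while the data-exchange overhead grows linearly with every additional CPU. Speedup is defined as `S(p) = T(1) / T(p)` and parallel efficiency as `E(p) = S(p) / p`, where 1.0 means perfect scaling. The function names and the parameters `total_work` and `comm_cost` are illustrative assumptions.

```python
def speedup(t_single: float, t_parallel: float) -> float:
    """Speedup S(p) = T(1) / T(p)."""
    return t_single / t_parallel


def efficiency(t_single: float, t_parallel: float, p: int) -> float:
    """Parallel efficiency E(p) = S(p) / p; 1.0 means perfect scaling."""
    return speedup(t_single, t_parallel) / p


def modeled_runtime(total_work: float, p: int, comm_cost: float) -> float:
    """Hypothetical toy model of runtime on p CPUs.

    The work is split evenly across p CPUs, while the data-exchange
    overhead grows linearly with each CPU beyond the first.
    """
    return total_work / p + comm_cost * (p - 1)


if __name__ == "__main__":
    total_work = 1000.0  # hypothetical single-CPU runtime in seconds
    comm_cost = 0.1      # hypothetical exchange cost per extra CPU in seconds
    t1 = modeled_runtime(total_work, 1, comm_cost)
    for p in (1, 8, 32, 64, 128):
        tp = modeled_runtime(total_work, p, comm_cost)
        print(f"{p:4d} CPUs: speedup {speedup(t1, tp):6.1f}, "
              f"efficiency {efficiency(t1, tp, p):.2f}")
```

In this toy model, efficiency stays close to 1 at small CPU counts and falls as the overhead term starts to dominate, which is why reducing the cost of data exchange matters most when scaling to many CPUs.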

Even before we started working with POP CoE, our code already scaled better than that, and the performance analysis allowed us to speed it up even further, especially on more than 32 CPUs. This allows us to calculate wind and microclimate simulations for entire cities in just a couple of days and with minimal energy use!

If you want to know exactly how fast we can complete your project, just drop us a note!
